Breaking Down Engineering Silos with AI Coding Tools: DevOps

TLDR: I experimented with how Claude Code interacts with cloud platforms like GCP and AWS to speed up generative AI experimentation when PaaS is not an option.

AI coding assistants like Claude Code aren't just transforming how we write code; they're bridging silos between traditionally separate engineering functions. For mobile and frontend engineers who've been focused on their own platforms, these tools provide a gateway to understanding infrastructure concepts and handling routine tasks that would otherwise require immediate DevOps assistance.

This isn't about replacing DevOps expertise but enabling collaboration across the full stack. When mobile and frontend engineers understand infrastructure decisions, DevOps engineers can focus on architecture and optimization rather than routine tasks. From my perspective, this makes teams more effective, especially when DevOps designs appropriate guardrails that let other engineers contribute safely without risking production systems.

For the past month, I've been experimenting with an important question: how effectively can AI coding tools help non-DevOps engineers manage cloud infrastructure, including complex tasks like cost optimization? The results are intriguing enough to share, and they suggest real potential worth exploring further.

What AI Can and Can't Do

To be specific about the silo bridging I'm describing: AI tools show real potential for helping with routine infrastructure tasks like resource provisioning, basic monitoring setup, and cost optimization through configuration adjustments. However, they don't replace the deep expertise needed for designing fault-tolerant systems, implementing security best practices, handling complex networking scenarios, or making architectural decisions that affect system reliability at scale.

Think of it this way: AI tools help application engineers speak enough "infrastructure" to have meaningful conversations and handle day-to-day tasks, while DevOps engineers remain essential for the strategic decisions that keep systems secure, scalable, and resilient.

This infrastructure literacy addresses a common organizational challenge: engineers who don't understand their infrastructure often write code that ignores resource consumption and scaling costs. Minor inefficiencies can become expensive at scale. AI tools help non-DevOps engineers see these connections, creating a culture where everyone considers their code's downstream impact, which reduces both the DevOps monitoring burden and costly surprises.

The Experiment

I set up Claude Code with AWS and GCP CLI tools and used it to:

Provision infrastructure for various projects (FastAPI apps, Neo4j databases, n8n workflows) using GCP services like Cloud Run, Compute Engine, Cloud SQL, and Cloud Secret Manager, along with Terraform, plus AWS services like SES for email routing

Troubleshoot deployment issues

Analyze and optimize cloud costs

Configure appropriate resources for proof-of-concept deployments
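To make the "configure appropriate resources" step concrete, here is a minimal sketch of the kind of command an assistant might propose for a proof-of-concept Cloud Run service. It only builds the `gcloud run services update` argument list so it can be reviewed before anyone runs it. The service name, region, and resource values are hypothetical, and the helper `build_cloud_run_update` is my own illustration, not something taken from the experiment.

```python
from typing import List, Optional


def build_cloud_run_update(
    service: str,
    region: str,
    memory: Optional[str] = None,
    cpu: Optional[str] = None,
    min_instances: Optional[int] = None,
    max_instances: Optional[int] = None,
) -> List[str]:
    """Build (but do not run) a `gcloud run services update` command."""
    cmd = ["gcloud", "run", "services", "update", service, "--region", region]
    # Only include the flags the caller actually wants to change.
    if memory is not None:
        cmd += ["--memory", memory]
    if cpu is not None:
        cmd += ["--cpu", cpu]
    if min_instances is not None:
        cmd += ["--min-instances", str(min_instances)]
    if max_instances is not None:
        cmd += ["--max-instances", str(max_instances)]
    return cmd


# Hypothetical proof-of-concept service: small footprint, scale to zero when idle.
command = build_cloud_run_update(
    "poc-api", "us-central1", memory="512Mi", cpu="1", min_instances=0, max_instances=2
)
print(" ".join(command))
```

Keeping the command as data until a human has read it is one simple way to stay in the loop when an AI tool suggests infrastructure changes.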

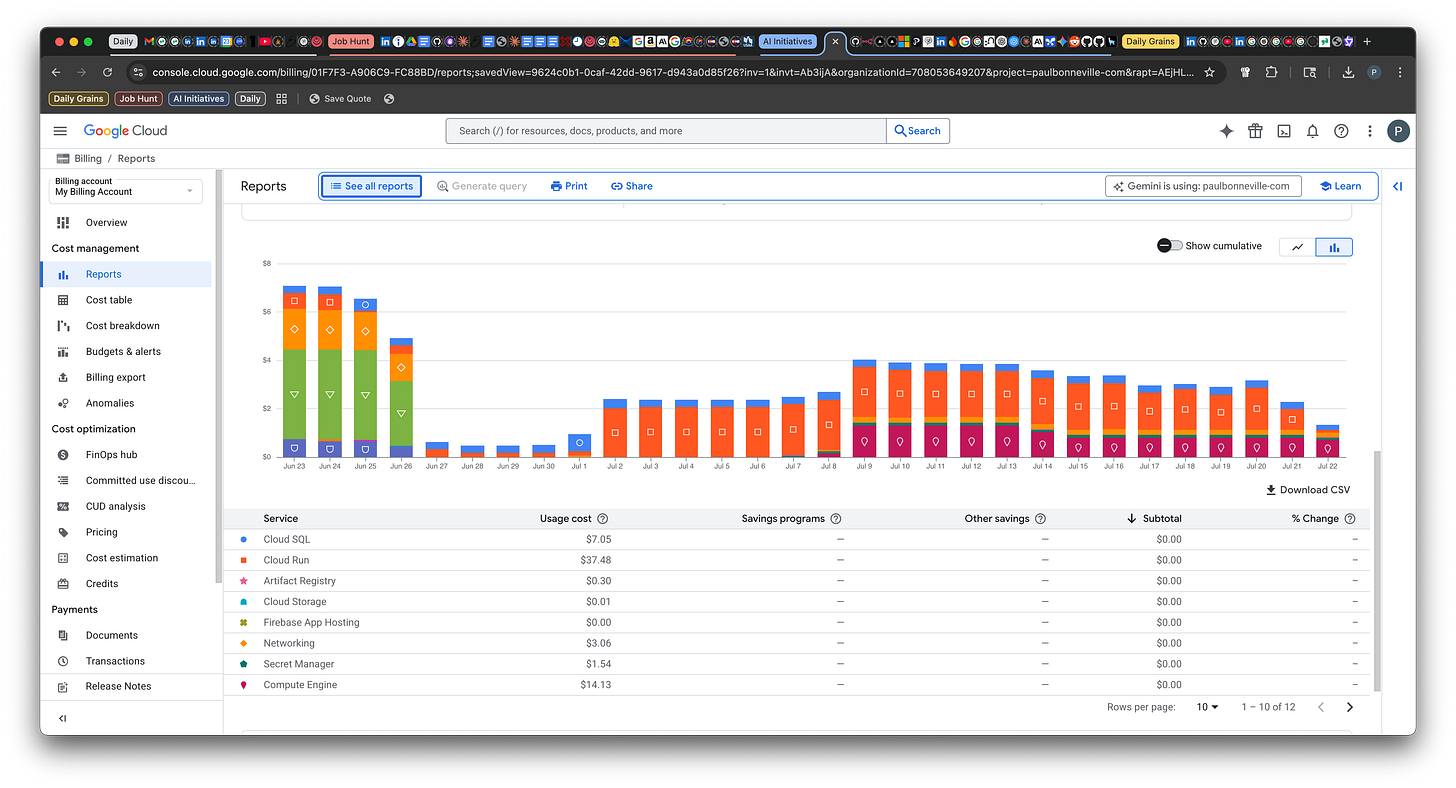

The screenshot above shows a concrete example: Claude Code helped me identify and resolve a Cloud Run cost issue through iterative resource optimization over just a few days.
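The screenshot does not spell out which settings changed, so the following is only an illustrative sketch of the arithmetic behind this kind of optimization: compute cost scales with the CPU and memory allocated and with the number of seconds billed, so a service that is always on with generous resources costs far more than a small one that is billed only while handling traffic. The rates below are placeholders, not real Cloud Run prices, and the two configurations are hypothetical.

```python
from dataclasses import dataclass

SECONDS_PER_MONTH = 30 * 24 * 3600  # assume a 30-day month


@dataclass(frozen=True)
class Rates:
    """Placeholder prices for illustration only, NOT real Cloud Run pricing."""
    per_vcpu_second: float
    per_gib_second: float


def monthly_compute_cost(
    vcpus: float, memory_gib: float, billed_seconds: float, rates: Rates
) -> float:
    """Cost = (vCPU rate * vCPUs + memory rate * GiB) * billed seconds."""
    per_second = vcpus * rates.per_vcpu_second + memory_gib * rates.per_gib_second
    return per_second * billed_seconds


rates = Rates(per_vcpu_second=0.00002, per_gib_second=0.000002)  # hypothetical

# Before: 2 vCPU / 4 GiB kept warm all month.
always_on = monthly_compute_cost(2, 4, SECONDS_PER_MONTH, rates)

# After: 1 vCPU / 0.5 GiB, billed for roughly two hours of traffic per day.
scaled_down = monthly_compute_cost(1, 0.5, 2 * 3600 * 30, rates)

print(f"always on:   {always_on:.2f}")
print(f"scaled down: {scaled_down:.2f}")
```

Plugging in the current published rates and your own traffic pattern turns this into a quick sanity check on whatever change the assistant proposes.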

These experiences taught me valuable lessons about the current capabilities and limitations of AI-assisted infrastructure management.

Why This Exploration Matters

You might wonder: Why not just use managed services like Render, Heroku, or DigitalOcean? These PaaS offerings are excellent and often the right choice. My goal wasn't to avoid them, but rather to explore how AI tools could help engineers who traditionally work at the application layer understand and manage the infrastructure layer when needed.

This capability becomes valuable for:

Understanding the full stack to make better architectural decisions

Prototyping ideas that require specific infrastructure configurations

Debugging issues that span multiple layers

Contributing to infrastructure discussions with more context

Reducing the communication gap between application and infrastructure teams

From a cost perspective, PaaS solutions work well for focused deployments. However, organizations running hundreds of applications often find that managing their own cloud infrastructure becomes more economical than paying PaaS premiums at scale. For these companies, having engineers who can navigate cloud platforms directly, even without deep DevOps expertise, becomes a significant advantage.
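A rough way to reason about that trade-off is a break-even calculation: self-managed infrastructure carries a fixed overhead (tooling, on-call, engineering time) but a lower cost per application, so it wins once the number of applications is large enough. The sketch below assumes a simple linear cost model, and every number in it is hypothetical.

```python
import math
from typing import Optional


def break_even_apps(
    fixed_overhead: float, paas_cost_per_app: float, self_managed_cost_per_app: float
) -> Optional[int]:
    """Smallest number of apps at which self-managed cost <= PaaS cost.

    Linear model (an assumption):
      PaaS total         = n * paas_cost_per_app
      self-managed total = fixed_overhead + n * self_managed_cost_per_app
    Returns None if self-managed never catches up.
    """
    saving_per_app = paas_cost_per_app - self_managed_cost_per_app
    if saving_per_app <= 0:
        return None
    return math.ceil(fixed_overhead / saving_per_app)


# Hypothetical monthly figures: 20,000 overhead, 150 per app on PaaS, 50 self-managed.
print(break_even_apps(20_000, 150, 50))
```

Real costs are rarely this linear, but even a crude model like this makes the "at scale" argument easier to discuss with the people who own the budget.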

Key Insight

I'm not a DevOps engineer. I know enough to deploy to production, but infrastructure optimization isn't my specialty. Yet with Claude Code, I successfully managed complex infrastructure tasks that would typically require deeper expertise.

This experience revealed something important: AI coding tools aren't replacing DevOps professionals. Instead, they're creating opportunities for better collaboration. When engineers from different domains can understand and contribute to infrastructure decisions, it leads to:

More informed architectural choices

Better communication between teams

DevOps engineers freed up to tackle complex challenges rather than routine tasks

Mobile and frontend engineers who can prototype and validate ideas independently

The result? I've deployed and optimized multiple open-source applications while significantly reducing costs. This demonstrates how AI coding tools are breaking down silos between engineering functions, platforms, and technology stacks, fostering a more collaborative and efficient development environment.

The Bigger Picture

As AI coding assistants continue to evolve, we're seeing the emergence of more versatile engineers who can work across traditional silos. This doesn't make specialists obsolete; it makes them more valuable. When everyone has basic literacy across domains, specialists can focus on the truly complex problems that require their deep expertise, while routine tasks become accessible to a broader range of team members.

For organizations, this means more flexible teams, faster prototyping, and better cross-functional understanding. For individual engineers, it means the opportunity to break out of traditional silos and become more effective collaborators.

Looking Forward

This month-long experiment revealed something crucial for larger corporations exploring AI: when engineers can rapidly provision and optimize infrastructure without waiting for DevOps cycles, the pace of generative AI experimentation accelerates dramatically. Teams can prototype ideas, test hypotheses, and validate AI use cases faster than ever before. In an era where AI innovation speed matters, breaking down these engineering silos isn't just about efficiency; it's about competitive advantage in the race to implement transformative AI solutions.